Integration with Glue + AWS S3 Tables

AWS S3 Tables is a special type of S3 Bucket that provides read and write interfaces compatible with Apache Iceberg table format standards, built on Amazon S3, offering the same durability, availability, scalability, and performance characteristics as S3 itself. Additionally, S3 Tables provides the following features:

- Compared to Iceberg tables stored in regular S3 Buckets, S3 Tables can deliver up to 3x higher query performance and up to 10x higher transactions per second.

- Automated table management. S3 Tables automatically optimizes Iceberg table data, including small file compaction, snapshot management, and garbage file cleanup.

The release of S3 Tables further simplifies Lakehouse architecture and brings more possibilities for cloud-native lake-warehouse systems. This includes cold-hot separation, data archiving, data backup, and compute-storage separation architectures, all of which could evolve into entirely new architectures based on S3 Tables.

Thanks to Amazon S3 Tables' high compatibility with the Iceberg API, Apache Doris can quickly integrate with S3 Tables. This article will demonstrate how to connect Apache Doris with S3 Tables and perform data analysis and processing.

This feature is supported since Doris 3.1

Usage Guide

01 Create S3 Table Bucket

S3 Table Bucket is the third type of Bucket launched by S3, on par with the previous General purpose bucket and Directory bucket.

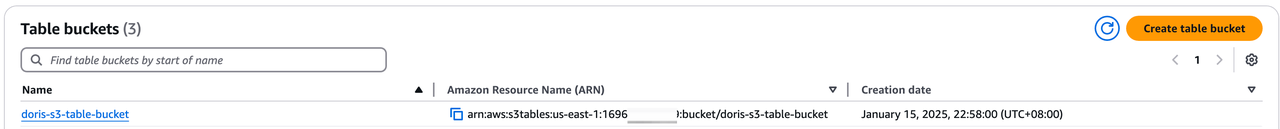

Here we create a Table Bucket named doris-s3-table-bucket. After creation, we will get a Table Bucket represented by an ARN.

02 Create Iceberg Catalog

-

Connecting to

s3 tablesusing AWS S3 Table Rest CatalogCREATE CATALOG aws_s3_tables PROPERTIES (

'type' = 'iceberg',

'iceberg.catalog.type' = 'rest',

'warehouse' = 'arn:aws:s3tables:us-east-1:<account_id>:bucket/<s3_table_bucket_name>',

'iceberg.rest.uri' = 'https://s3tables.us-east-1.amazonaws.com/iceberg',

'iceberg.rest.sigv4-enabled' = 'true',

'iceberg.rest.signing-name' = 's3tables',

'iceberg.rest.signing-region' = 'us-east-1',

'iceberg.rest.access-key-id' = '<ak>',

'iceberg.rest.secret-access-key' = '<sk>'

); -

Connecting to

s3 tablesusing Glue Rest CatalogCREATE CATALOG glue_s3 PROPERTIES (

'type' = 'iceberg',

'iceberg.catalog.type' = 'rest',

'iceberg.rest.uri' = 'https://glue.<region>.amazonaws.com/iceberg',

'warehouse' = '<acount_id>:s3tablescatalog/<s3_table_bucket_name>',

'iceberg.rest.sigv4-enabled' = 'true',

'iceberg.rest.signing-name' = 'glue',

'iceberg.rest.access-key-id' = '<ak>',

'iceberg.rest.secret-access-key' = '<sk>',

'iceberg.rest.signing-region' = '<region>'

);

03 Access S3Tables

Doris > SWITCH iceberg_s3;

Doris > SHOW DATABASES;

+--------------------+

| Database |

+--------------------+

| information_schema |

| my_namespace |

| mysql |

+--------------------+

Doris > USE my_namespace;

Doris > SHOW TABLES;

+------------------------+

| Tables_in_my_namespace |

+------------------------+

| my_table |

+------------------------+

Doris > SELECT * FROM my_table;

+------+------+-------+

| id | name | value |

+------+------+-------+

| 1 | ABC | 100 |

| 2 | XYZ | 200 |

+------+------+-------+

04 Create S3Tables Table and Write Data

Doris > CREATE TABLE partition_table (

-> `ts` DATETIME COMMENT 'ts',

-> `id` INT COMMENT 'col1',

-> `pt1` STRING COMMENT 'pt1',

-> `pt2` STRING COMMENT 'pt2'

-> )

-> PARTITION BY LIST (day(ts), pt1, pt2) ();

Doris > INSERT INTO partition_table VALUES

-> ("2024-01-01 08:00:00", 1000, "us-east", "PART1"),

-> ("2024-01-02 10:00:00", 1002, "us-sout", "PART2");

Query OK, 2 rows affected

{'status':'COMMITTED', 'txnId':'1736935786473'}

Doris > SELECT * FROM partition_table;

+----------------------------+------+---------+-------+

| ts | id | pt1 | pt2 |

+----------------------------+------+---------+-------+

| 2024-01-02 10:00:00.000000 | 1002 | us-sout | PART2 |

| 2024-01-01 08:00:00.000000 | 1000 | us-east | PART1 |

+----------------------------+------+---------+-------+

05 Time Travel

We can insert another batch of data, then use the $snapshots system table to view Iceberg Snapshots:

Doris > INSERT INTO partition_table VALUES

-> ("2024-01-03 08:00:00", 1000, "us-east", "PART1"),

-> ("2024-01-04 10:00:00", 1002, "us-sout", "PART2");

Query OK, 2 rows affected (9.76 sec)

{'status':'COMMITTED', 'txnId':'1736935786474'}

Doris > SELECT * FROM partition_table$snapshots\G

*************************** 1. row ***************************

committed_at: 2025-01-15 23:27:01

snapshot_id: 6834769222601914216

parent_id: -1

operation: append

manifest_list: s3://80afcb3f-6edf-46f2-7fhehwj6cengfwc7n6iz7ipzakd7quse1b--table-s3/metadata/snap-6834769222601914216-1-a6b2230d-fc0d-4c1d-8f20-94bb798f27b1.avro

summary: {"added-data-files":"2","added-records":"2","added-files-size":"5152","changed-partition-count":"2","total-records":"2","total-files-size":"5152","total-data-files":"2","total-delete-files":"0","total-position-deletes":"0","total-equality-deletes":"0","iceberg-version":"Apache Iceberg 1.6.1 (commit 8e9d59d299be42b0bca9461457cd1e95dbaad086)"}

*************************** 2. row ***************************

committed_at: 2025-01-15 23:30:00

snapshot_id: 5670090782912867298

parent_id: 6834769222601914216

operation: append

manifest_list: s3://80afcb3f-6edf-46f2-7fhehwj6cengfwc7n6iz7ipzakd7quse1b--table-s3/metadata/snap-5670090782912867298-1-beeed339-be96-4710-858b-f39bb01cc3ff.avro

summary: {"added-data-files":"2","added-records":"2","added-files-size":"5152","changed-partition-count":"2","total-records":"4","total-files-size":"10304","total-data-files":"4","total-delete-files":"0","total-position-deletes":"0","total-equality-deletes":"0","iceberg-version":"Apache Iceberg 1.6.1 (commit 8e9d59d299be42b0bca9461457cd1e95dbaad086)"}

Use the VERSION AS OF syntax to query different snapshots:

Doris > SELECT * FROM partition_table FOR VERSION AS OF 5670090782912867298;

+----------------------------+------+---------+-------+

| ts | id | pt1 | pt2 |

+----------------------------+------+---------+-------+

| 2024-01-04 10:00:00.000000 | 1002 | us-sout | PART2 |

| 2024-01-03 08:00:00.000000 | 1000 | us-east | PART1 |

| 2024-01-01 08:00:00.000000 | 1000 | us-east | PART1 |

| 2024-01-02 10:00:00.000000 | 1002 | us-sout | PART2 |

+----------------------------+------+---------+-------+

Doris > SELECT * FROM partition_table FOR VERSION AS OF 6834769222601914216;

+----------------------------+------+---------+-------+

| ts | id | pt1 | pt2 |

+----------------------------+------+---------+-------+

| 2024-01-02 10:00:00.000000 | 1002 | us-sout | PART2 |

| 2024-01-01 08:00:00.000000 | 1000 | us-east | PART1 |

+----------------------------+------+---------+-------+

06 Access S3 Tables Using EMR Spark

Data written using Doris can also be accessed using Spark:

spark-shell --jars /usr/share/aws/iceberg/lib//iceberg-spark-runtime-3.5_2.12-1.6.1-amzn-1.jar \

--packages software.amazon.s3tables:s3-tables-catalog-for-iceberg-runtime:0.1.3 \

--conf spark.sql.catalog.s3tablesbucket=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.s3tablesbucket.catalog-impl=software.amazon.s3tables.iceberg.S3TablesCatalog \

--conf spark.sql.catalog.s3tablesbucket.warehouse=arn:aws:s3tables:us-east-1:169698000000:bucket/doris-s3-table-bucket \

--conf spark.sql.defaultCatalog=s3tablesbucket \

--conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions

scala> spark.sql("SELECT * FROM s3tablesbucket.my_namespace.`partition_table` ").show()

+-------------------+----+-------+-----+

| ts| id| pt1| pt2|

+-------------------+----+-------+-----+

|2024-01-02 10:00:00|1002|us-sout|PART2|

|2024-01-01 08:00:00|1000|us-east|PART1|

|2024-01-04 10:00:00|1002|us-sout|PART2|

|2024-01-03 08:00:00|1000|us-east|PART1|

+-------------------+----+-------+-----+